How to Track Which Features Are Actually Driving Your AI Costs

Solve the AI cost attribution problem by tracking costs at the feature and user level to optimize spend and improve profitability.

Your OpenAI bill just hit $50,000 for the month. Your CFO wants to know why. Your engineering team looks at the bill and… they have no idea. They can see the total, but they can’t tell you which features drove it, which users consumed the most, or which product areas are the cost culprits.

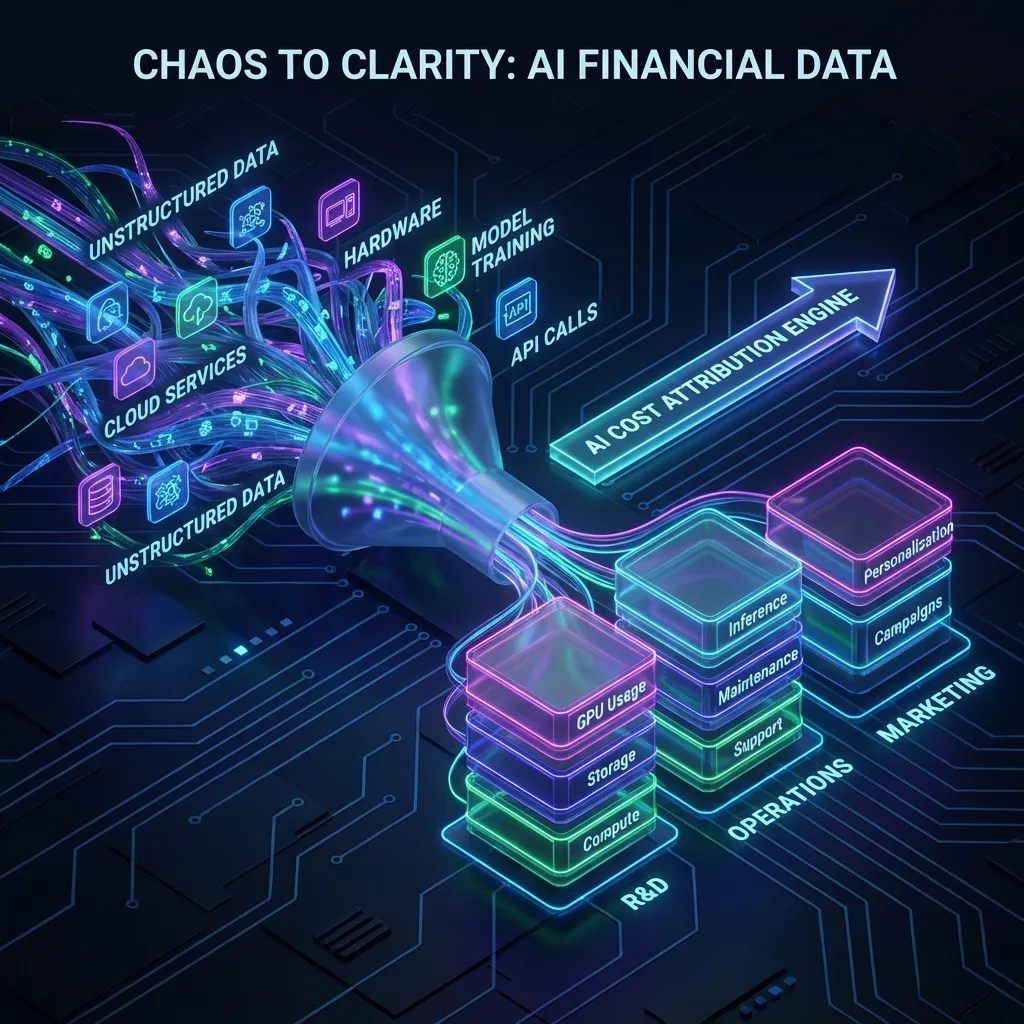

This is the cost attribution problem, and it’s one of the most frustrating challenges in AI cost management. Without granular attribution, you’re essentially managing your AI spend with a blindfold on. You know the total, but you have no idea what’s driving it or how to optimize it.

Why Attribution Is So Hard

In traditional software, cost attribution was relatively straightforward. You could look at your AWS bill and see costs broken down by service and resource. Database costs, compute costs, storage costs. You could tag resources and track spending by team or project. It wasn’t perfect, but it worked reasonably well.

AI costs are different. A single user action might trigger a cascade of API calls across multiple services. Your user asks a question in your chatbot. That triggers an OpenAI API call for the initial response. Then a Pinecone query for context retrieval. Then another OpenAI call with the augmented context. Maybe a tool call to an external API. Then a final OpenAI call to format the response.

Which feature does this cost belong to? The chatbot feature, obviously. But what if that same chatbot is used by multiple different features in your product? What if different user segments use it very differently? What if the cost varies wildly based on the complexity of the question? Suddenly attribution isn’t so simple.

The Multi Dimensional Attribution Challenge

Cost attribution in AI isn’t one dimensional. You need to track costs across multiple dimensions simultaneously, and each dimension tells you something different about your business.

You need feature level attribution. Which features in your product are expensive? Maybe your document summarization feature costs 10x more than your email drafting feature. Without knowing this, you can’t make informed product decisions. You might be investing heavily in a feature that’s bleeding money while neglecting a highly profitable one.

You need user level attribution. Which customers or user segments are driving costs? You might discover that enterprise customers use your product very differently than SMB customers. Maybe they’re making more complex queries, using features more intensively, or pushing your system in ways you didn’t anticipate. If you’re charging everyone the same price but costs vary by 10x, you have a pricing problem.

You need department or team level attribution. If you’re a B2B company, different teams within your customer organizations might use your product differently. The sales team might use it lightly for quick queries. The analytics team might be running heavy workloads. If you’re not tracking this, you can’t optimize for different use cases or create appropriate pricing tiers.

You need temporal attribution too. When do costs spike? Is it during business hours when users are most active? Is it during batch processing jobs that run overnight? Understanding the time dimension helps you optimize scheduling, implement rate limiting more intelligently, and plan capacity better.

The Power User Problem

One of the most common attribution challenges is identifying power users who are driving disproportionate costs. In a typical AI product, usage follows a power law distribution. 80% of your costs might be driven by 20% of your users. Or worse, 90% of costs driven by 10% of users.

These power users aren’t necessarily doing anything wrong. They might just be using your product exactly as intended, but more intensively than you expected. Maybe they discovered a workflow that involves making hundreds of API calls. Maybe they’re processing much larger documents than your typical user. Maybe they’re in a different industry that has legitimately higher usage needs.

Without user level cost attribution, you have no way to identify these patterns. You just see your total bill going up and have no idea why. With proper attribution, you can identify power users, understand their usage patterns, and make informed decisions about how to handle them.

Maybe you need to create a higher pricing tier for power users. Maybe you need to implement usage limits or rate limiting. Maybe you need to optimize the underlying prompts or workflows to make them more efficient. But you can only make these decisions if you know who the power users are and what they’re doing.

The New Feature Blindness

Here’s a scenario that plays out constantly. Your product team ships a new AI powered feature. They’re excited because early metrics show good adoption. Users love it. Then two weeks later, the bill comes and it’s 40% higher than expected. What happened?

Without feature level attribution, you’re doing forensics after the fact. You’re trying to figure out what changed, correlating deployment dates with cost spikes, guessing about what might have driven the increase. It’s time consuming, imprecise, and frustrating for everyone involved.

With proper attribution, you know immediately. You can see that the new feature is being adopted faster than expected, or that it’s more expensive per use than you modeled, or that users are using it in ways you didn’t anticipate. This gives you actionable information in real time, not weeks later.

Real time feature level attribution also enables you to make better product decisions. Maybe that new feature is expensive but drives huge customer value and retention. Then it’s worth the cost. Or maybe it’s expensive and users don’t really care about it. Then you should kill it or deprioritize it. But you can only make these judgment calls if you have the data.

The Engineering Team Attribution Gap

There’s often a disconnect between different engineering teams about AI costs. The team building the customer facing chat feature doesn’t think much about costs. They’re focused on user experience and functionality. But their decisions, like whether to use GPT 4 or GPT 3.5, or how to structure their prompts, have huge cost implications.

Meanwhile, the platform team sees the overall bill going up but doesn’t have visibility into which features or teams are driving it. They might try to implement cost cutting measures, but without attribution data, they’re making decisions blindly. They might optimize the wrong things or implement restrictions that hurt important features while leaving wasteful ones untouched.

Team level cost attribution solves this by creating accountability. Each team can see their contribution to overall AI spend. They can track their trends over time. They can set budget targets and monitor against them. This creates a culture of cost awareness without requiring centralized control.

The Failed Operation Black Hole

Here’s a cost category that almost nobody tracks properly: failed operations. Your LLM call times out and you retry it. That’s two calls worth of cost for zero user value. Your context retrieval pulls back too much data and you hit token limits, requiring you to make multiple calls. More wasted cost.

Failed operations can account for 10 to 20% of your AI spend, sometimes more. But because they don’t result in successful outputs, they often slip through the cracks of attribution systems. You’re paying for them, but you’re not tracking them, so you can’t optimize them.

Good attribution systems need to track not just successful operations but failed ones too. You need to know which features have high failure rates. Which types of requests are most likely to fail. Which error conditions are most expensive. This lets you prioritize engineering work on improving reliability where it matters most.

The Cross Platform Attribution Nightmare

Most AI companies don’t use just one AI service. They might use OpenAI for their main chat interface, Anthropic for document analysis, Pinecone for vector search, AWS for hosting their fine tuned models, and various other services for different parts of their stack.

Each of these services provides its own billing and usage data, but in different formats, with different levels of detail, and on different schedules. Piecing together a unified view of costs across all these platforms is a massive operational headache.

Without unified attribution, you’re looking at each service in isolation. You can’t see the true end to end cost of a user action that spans multiple services. You can’t compare costs across different parts of your product that use different underlying services. You’re stuck with siloed data that doesn’t give you a complete picture.

Building an Attribution System

The foundation of good attribution is tagging. Every API call, every operation, every cost generating action needs to be tagged with metadata. At minimum, you need tags for user ID, feature ID, and some kind of request or session ID that lets you trace related operations.

But tagging alone isn’t enough. You need infrastructure to collect, aggregate, and analyze this data. You need to join data from multiple sources. You need to handle the volume of data that comes from high throughput AI applications. And you need to present it in ways that are useful for different stakeholders.

Your engineering team needs detailed technical views showing costs by endpoint, model, and prompt type. Your product team needs views showing costs by feature and user segment. Your finance team needs views that align with how you think about the business and how you price your product. One attribution system needs to serve multiple use cases.

The timing of attribution data matters too. Monthly reports aren’t good enough. By the time you see the data, the opportunity to react is gone. You need near real time attribution so you can catch problems as they happen, not weeks later when the damage is done.

The Attribution ROI

Building a good attribution system requires investment. Engineering time to implement tagging. Infrastructure to collect and process the data. Tools to visualize and analyze it. But the ROI is massive if you’re spending serious money on AI.

I’ve seen companies reduce their AI costs by 30 to 40% just by implementing better attribution. Not through any technical optimization, but simply by understanding where the money was going and making informed decisions. They killed wasteful features, optimized expensive ones, implemented rate limiting for power users, and shifted resources toward high ROI activities.

Attribution also enables better pricing decisions. When you know the true cost of serving different customer segments, you can price accordingly. You can create usage tiers that actually reflect costs. You can identify which customers are profitable and which aren’t. This directly impacts your ability to build a sustainable business.

Perhaps most importantly, attribution changes the culture. When everyone can see costs and understand their impact, they make better decisions. Engineers think more carefully about their model choices. Product managers consider cost when prioritizing features. Finance can forecast more accurately. Everyone aligns around efficient growth rather than wasteful growth.

Getting Started

You don’t need to build the perfect attribution system on day one. Start simple. Pick one dimension that matters most to your business, maybe feature level attribution or user level attribution. Implement basic tagging for that dimension. Build simple dashboards that show trends over time.

Then iterate. Add more dimensions as you learn what questions you need to answer. Improve the granularity of your tagging. Add more sophisticated analysis capabilities. The key is to start getting some visibility now rather than waiting until you have the perfect system designed.

The companies that figure out cost attribution early will have a massive advantage. They’ll operate more efficiently, price more intelligently, and make better product decisions. The ones that keep flying blind will waste money, make poor choices, and struggle to maintain margins. In a competitive market, that difference compounds quickly.